# Challenge 3: Storage Account - Creating Data Storage for VMs and Applications

# Here is what you will learn 🎯

- how to create an Azure storage account using the portal for use as a 'cloud file share'

- enable 'secured' access to the storage account data (e.g. blobs) using Shared Access Signatures (SAS)

- create a stored access policy so that permissions can be modified after SAS tokens have been issued

- use AAD credentials as a 'better' way to authorize access to a storage account

- create a file share and attach it to your Azure virtual machine

# Table of Contents

- Create a storage account and a container using the Azure portal

- Authorize access to blobs using AAD (optional)

- Add an Azure file share to a server

- Cleanup

# Create a storage account and a container using the Azure portal

Log in to your Azure subscription and search the marketplace for 'Storage Account'.

Create a storage account with the following values:

| Name | Value |
| --- | --- |
| Resource Group | (new) rg-sachallenge |
| Storage account name | %unique lowercase value% |
| Location | West Europe |
| Performance | Standard |
| Replication | Locally-redundant storage (LRS) |
| Access tier | Hot |

TIP

📝 Find some use cases for [SAS](https://docs.microsoft.com/azure/storage/common/storage-sas-overview#when-to-use-a-shared-access-signature)

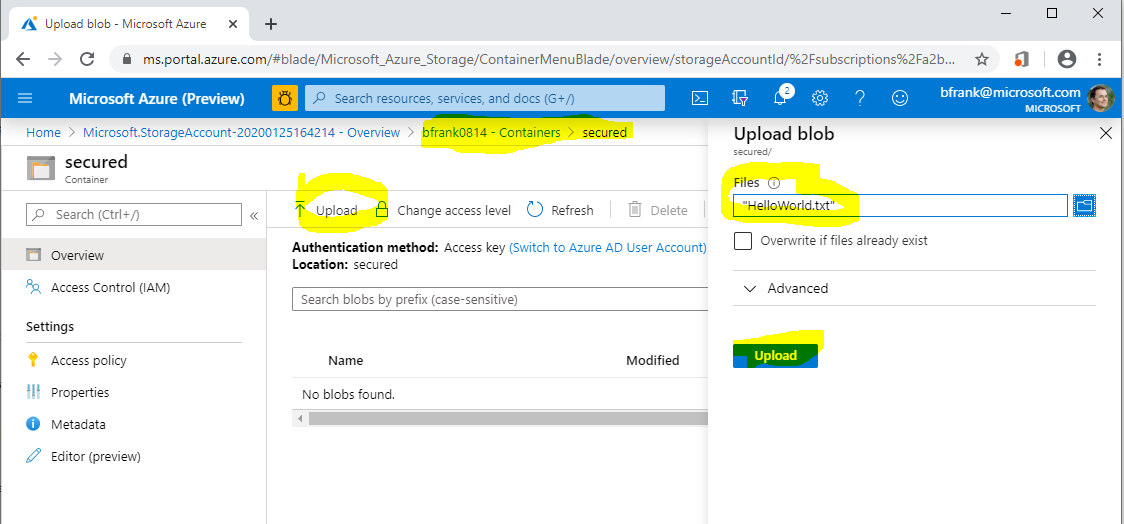

Once the storage account is created, generate a new container within the storage account:

[Azure Portal] -> %Your Storage Account% -> Containers -> "+ Container"

| Name | Value |
| --- | --- |
| Name | secured |
| Public access level | Private (no anonymous access) |

Now upload a small file:

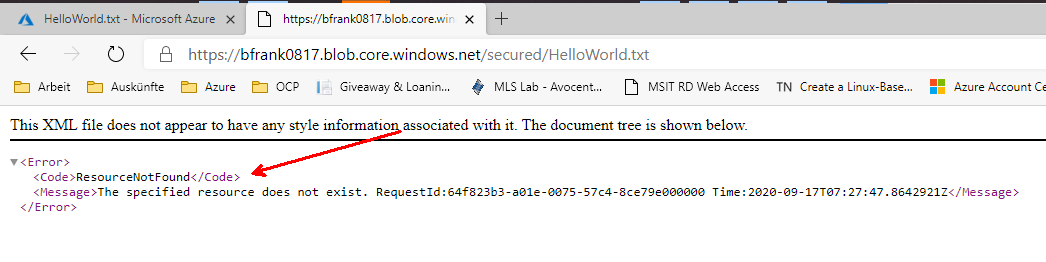

❔ Question: Can you download the file using its URI in another browser session?

Answer: No. Anonymous access is not allowed, and this URI does not contain an authorization token.

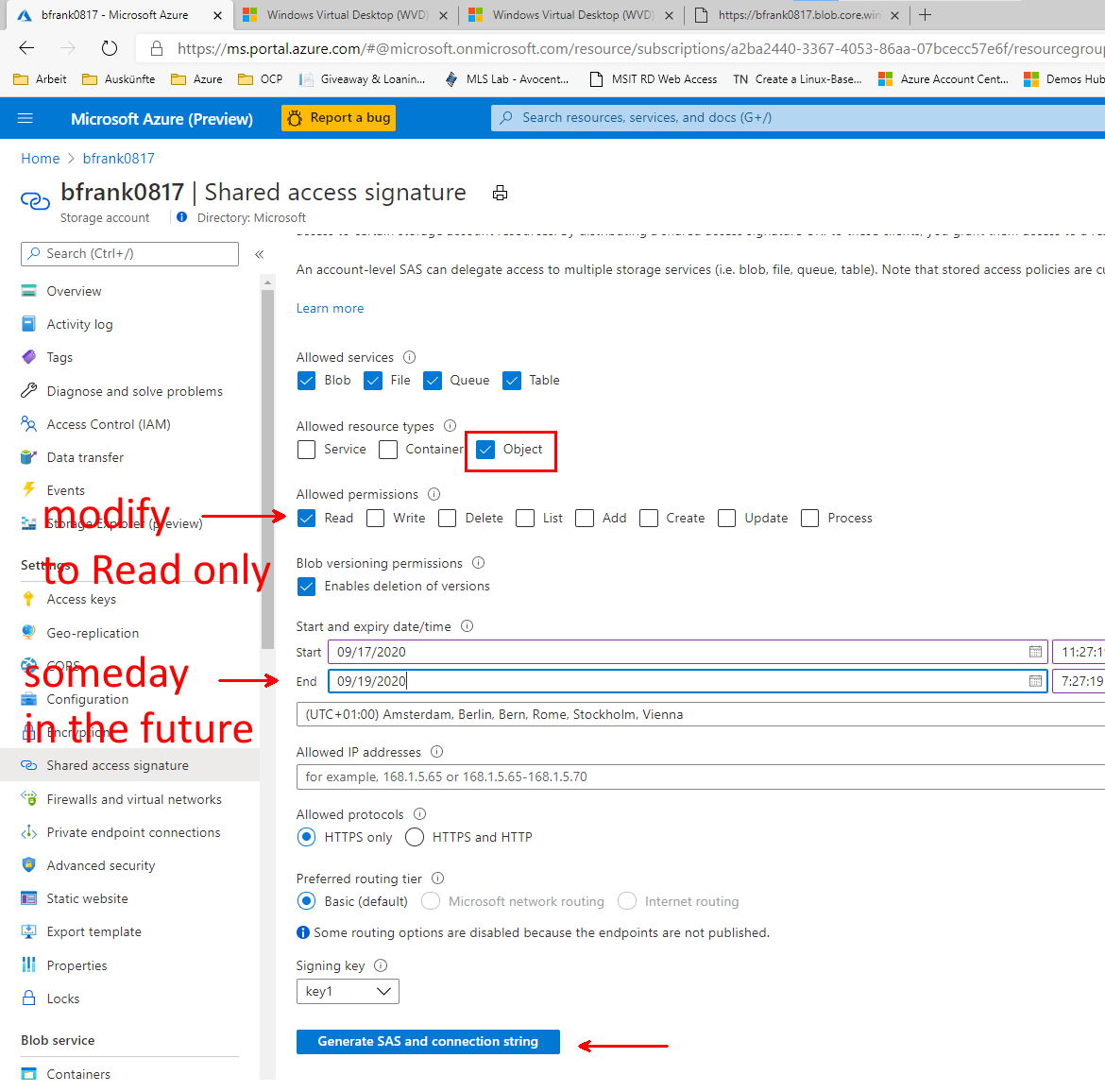

Create a Shared Access Signature via the portal to enable blob access:

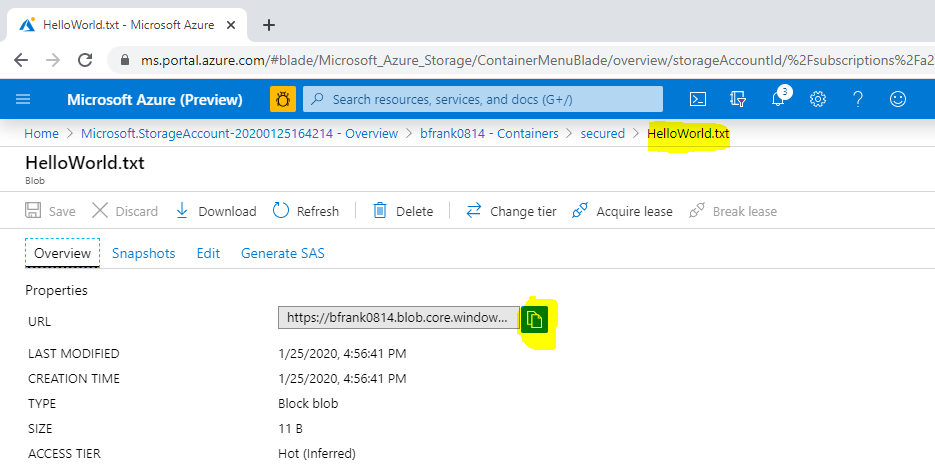

[Azure Portal] -> %Your Storage Account% -> Shared access signature -> "Generate SAS and connection string"

Copy the Blob Service SAS URL to the clipboard.

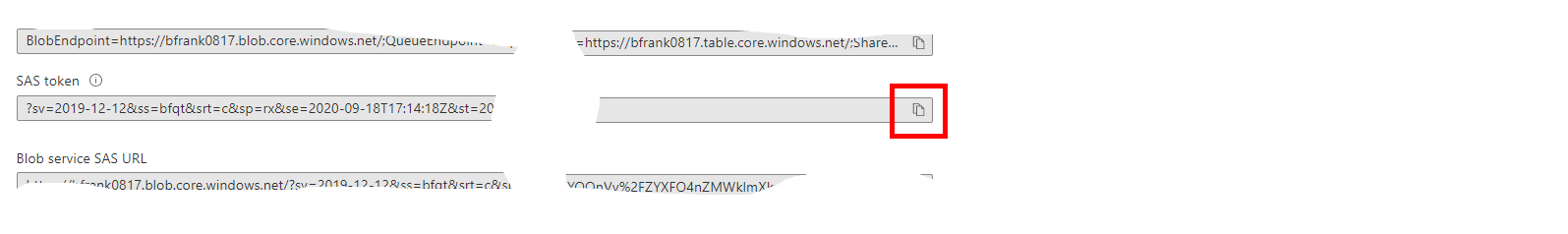

In the result, copy the SAS token and edit the SAS URL: you need to add the path to the uploaded file. It should look similar to:

https://**%Your Storage Account Name%**.blob.core.windows.net/**secured/HelloWorld.txt**?sv=2019-02-02&ss=bfqt&srt=sco&sp=rwdlacup&se=2020-01-26T00:03:42Z&st=2020-01-25T16:03:42Z&spr=https&sig=Pehc....

❔ Question: Can you now download the file in the browser? [Yes]
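Editing the SAS URL by hand is easy to get wrong, so here is a minimal Python sketch of the same step: it places the container and blob name in the path and leaves the SAS token (the query string) untouched. Python is used only because it runs without an Azure subscription. The account name `mystorageaccount`, the `PLACEHOLDER` signature, and the helper names `add_blob_path` and `describe_token` are assumptions made for illustration.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qs, quote


def add_blob_path(service_sas_url: str, container: str, blob_name: str) -> str:
    """Return the service SAS URL with '/<container>/<blob>' as its path.

    The query string (the SAS token) is carried over unchanged.
    """
    parts = urlsplit(service_sas_url)
    path = "/" + quote(container) + "/" + quote(blob_name)
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, ""))


def describe_token(sas_url: str) -> dict:
    """Pick the permissions and validity window out of the SAS token."""
    query = parse_qs(urlsplit(sas_url).query)
    return {
        "permissions": query.get("sp", [""])[0],
        "start": query.get("st", [""])[0],
        "expiry": query.get("se", [""])[0],
        "protocol": query.get("spr", [""])[0],
    }


if __name__ == "__main__":
    # Hypothetical account name and placeholder signature, for illustration only.
    service_url = (
        "https://mystorageaccount.blob.core.windows.net/"
        "?sv=2019-02-02&ss=bfqt&srt=sco&sp=rwdlacup"
        "&se=2020-01-26T00:03:42Z&st=2020-01-25T16:03:42Z"
        "&spr=https&sig=PLACEHOLDER"
    )
    blob_url = add_blob_path(service_url, "secured", "HelloWorld.txt")
    print(blob_url)
    print(describe_token(blob_url))
```

The `sp`, `st`, `se` and `spr` fields are the same ones visible in the example URL above, so printing them is a quick way to see what a token allows and for how long before you hand it out.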

TIP

📝 If a SAS:

- is leaked, it can be used by anyone who obtains it, which can potentially compromise your storage account.

- is provided to a client application and expires before the application can retrieve a new SAS from your service, the application's functionality may be hindered.
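To make the expiry point concrete, here is a small Python sketch of how a client could notice that its SAS is about to expire and request a new one in time. It assumes the `se` (expiry) field uses the UTC timestamp format shown in the example URL of this challenge. The function name `expires_soon`, the five-minute margin, and the account name are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import urlsplit, parse_qs


def expires_soon(sas_url: str, now: datetime,
                 margin: timedelta = timedelta(minutes=5)) -> bool:
    """Return True if the SAS expires within `margin` of `now` (or already has)."""
    expiry_text = parse_qs(urlsplit(sas_url).query)["se"][0]
    # Assumes the 'YYYY-MM-DDTHH:MM:SSZ' form; other allowed forms are not handled.
    expiry = datetime.strptime(expiry_text, "%Y-%m-%dT%H:%M:%SZ")
    expiry = expiry.replace(tzinfo=timezone.utc)
    return expiry - now <= margin


if __name__ == "__main__":
    # Hypothetical account name and placeholder signature.
    url = ("https://mystorageaccount.blob.core.windows.net/secured/HelloWorld.txt"
           "?sv=2019-02-02&sp=r&se=2020-01-26T00:03:42Z&sig=PLACEHOLDER")
    an_hour_before = datetime(2020, 1, 25, 23, 0, 0, tzinfo=timezone.utc)
    just_before = datetime(2020, 1, 26, 0, 0, 0, tzinfo=timezone.utc)
    print(expires_soon(url, an_hour_before))  # False
    print(expires_soon(url, just_before))     # True
```

In a real application `now` would be `datetime.now(timezone.utc)`; it is passed in here so the example gives the same result every time it runs.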

Revoke the storage account's access key1 (optional):

[Azure Portal] -> %Your Storage Account% -> Access keys -> key1 -> 'regenerate'

❔ Questions:

- Can you still download the file in the browser using the above URL? [No]

- Why? [Because the SAS token was signed with the previous key1, which is no longer valid.]
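The reason behind this answer can be sketched in a few lines of Python. A SAS signature is an HMAC-SHA256 computed with the account key, so a signature made with the old key1 no longer matches once key1 is regenerated. This is a simplified model: the keys are made up, the string-to-sign is a stand-in (the real field layout is defined in the Azure Storage documentation), and only key1 is modelled.

```python
import base64
import hashlib
import hmac


def sign(string_to_sign: str, account_key_b64: str) -> str:
    """HMAC-SHA256 over the string-to-sign, keyed with the decoded account key."""
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def is_valid(string_to_sign: str, signature: str, current_key_b64: str) -> bool:
    """Recompute the signature with the current key and compare."""
    expected = sign(string_to_sign, current_key_b64)
    return hmac.compare_digest(expected, signature)


if __name__ == "__main__":
    # Made-up keys and a simplified string-to-sign, for illustration only.
    old_key1 = base64.b64encode(b"old-key1-material").decode("ascii")
    new_key1 = base64.b64encode(b"regenerated-key1-material").decode("ascii")
    string_to_sign = "rwdlacup\n2020-01-25T16:03:42Z\n2020-01-26T00:03:42Z\nhttps"

    sig = sign(string_to_sign, old_key1)
    print(is_valid(string_to_sign, sig, old_key1))  # True: key1 not yet regenerated
    print(is_valid(string_to_sign, sig, new_key1))  # False: key1 was regenerated
```

Nothing about the token itself changes when the key is regenerated. The service simply can no longer reproduce the signature, which is why every SAS signed with that key stops working at once.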

# Create a stored access policy to control permissions after SAS token is issued

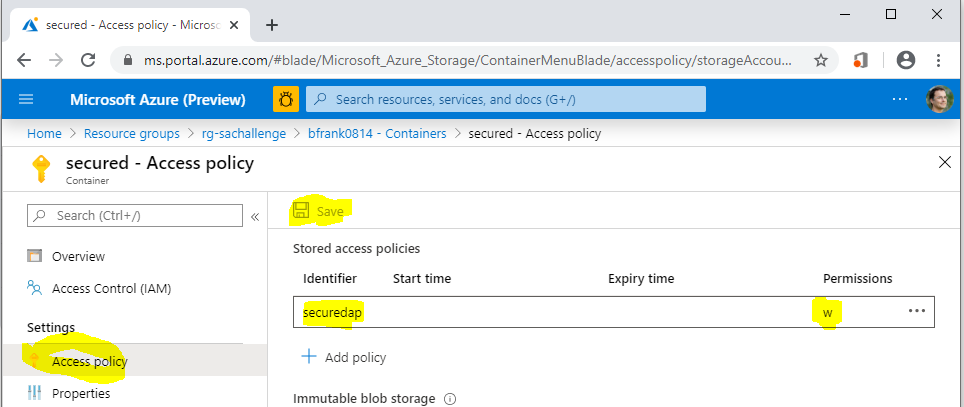

Define a stored access policy ('securedap') on the container 'secured' that grants Write permission:

[Azure Portal] -> %Your Storage Account% -> Containers -> 'secured' -> Access policy -> 'Stored access policy' -> '+' Add policy

| Name | Value |
| --- | --- |
| Identifier | securedap |
| Permissions | Write |

TIP

📝 Don't forget to use the save button!

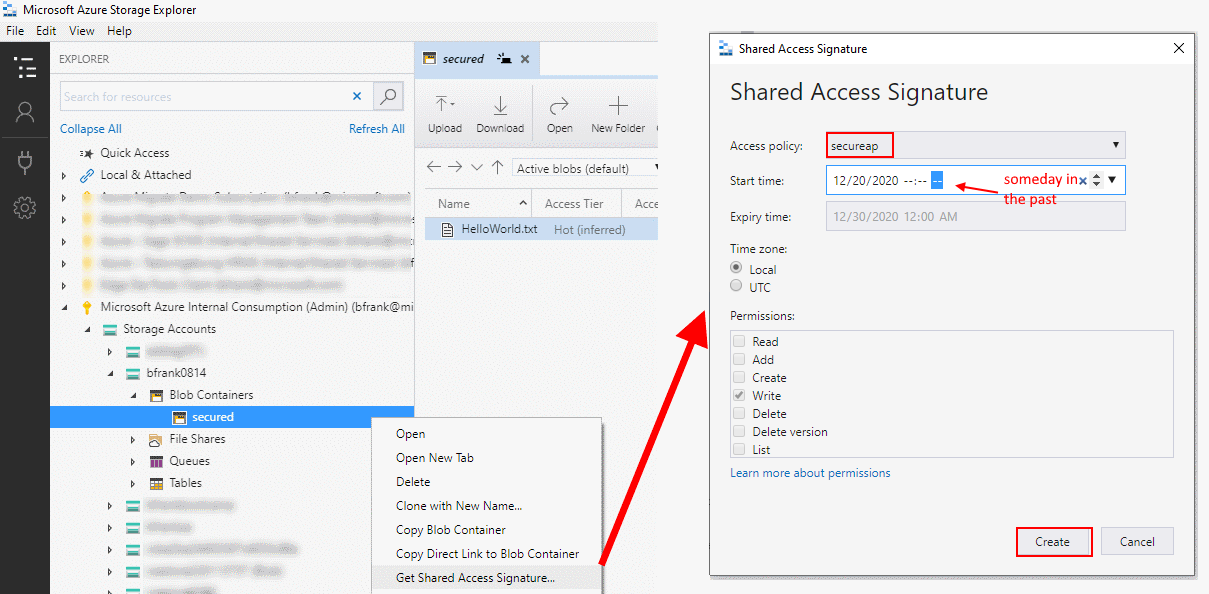

Create a SAS URL that uses this access policy with Azure Storage Explorer:

Open [Microsoft Azure Storage Explorer](https://azure.microsoft.com/features/storage-explorer/) (see also [Day 2: Challenge 0 - Setup your System](https://github.com/azuredevcollege/trainingdays/blob/master/day2/challenges/00-challenge-setup.md)).

Navigate to your storage account and container.

Right-click the container and select 'Get Shared Access Signature', choosing our access policy.

Copy the URI to the clipboard.

Edit the SAS URI: add the path to the uploaded file.

❔ Question: Can you now download the file in the browser? [No]

Edit the stored access policy 'securedap' to allow READ access

[Azure Portal] -> %Your Storage Account% -> Containers -> 'secured' -> Access policy -> Under 'Stored access policy' -> 'securedap' -> '...' -> Edit -> Permissions -> Add READ -> OK -> Save

❔ Question: Can you now download the file in the browser? [Yes]

TIP

📝 When you distribute this SAS URI to users or apps, you can later change its behavior by modifying the access policy. Stored access policies therefore let you change the permissions on a container after the SAS has been issued to users or apps.
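The behavior you just observed can be modelled with a toy Python sketch: a SAS based on a stored access policy carries the policy's identifier in its `si` field rather than a fixed permission list, and the permissions are looked up from the policy when a request is checked. The dictionary `stored_policies` stands in for the policies saved on the container, and the function name `allowed`, the account name, and the placeholder signature are assumptions. This is not how the service is implemented, only an illustration of the lookup.

```python
from urllib.parse import urlsplit, parse_qs

# Toy stand-in for the policies stored on the container (identifier -> permissions).
stored_policies = {"securedap": "w"}


def allowed(sas_url: str, needed: str, policies: dict) -> bool:
    """Return True if the policy named by the token's 'si' field grants `needed`."""
    query = parse_qs(urlsplit(sas_url).query)
    identifier = query.get("si", [None])[0]
    permissions = policies.get(identifier, "")
    return needed in permissions


if __name__ == "__main__":
    # Hypothetical account name and placeholder signature.
    url = ("https://mystorageaccount.blob.core.windows.net/secured/HelloWorld.txt"
           "?sv=2019-02-02&si=securedap&sr=c&sig=PLACEHOLDER")
    print(allowed(url, "r", stored_policies))  # False: the policy only grants write
    stored_policies["securedap"] = "rw"        # edit the policy, keep the same URL
    print(allowed(url, "r", stored_policies))  # True
```

The URL handed to users never changes between the two checks. Only the policy does, which matches the portal experiment above.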

# Authorize access to blobs using AAD (optional)

You can also authorize access to storage accounts using Azure Active Directory (AAD) credentials (see the Azure documentation), which means you assign users RBAC permissions on, for example, a container. This is in fact the recommended way.

However, apps might want to use 'service accounts' as users (aka Service Principals) in Azure. The following PowerShell code creates a Service Principal in AAD. Run this in your cloud shell (How? See challenge 2):

    $servicePrincipalName = "myADCServicePrincipal$(Get-Random -Minimum 100 -Maximum 999)" # must be unique within the AAD tenant

    # Get your AAD tenant ID and subscription ID
    $tenantID = (Get-AzContext).Tenant.Id # e.g. '11111111-1111-1111-1111-111111111111'
    $subscriptionID = (Get-AzContext).Subscription.Id # e.g. '22222222-2222-2222-2222-222222222222'

    # The Azure CLI is more comfortable to use for creating a service principal
    $jsonResult = & az ad sp create-for-rbac --name $servicePrincipalName --role 'Contributor' --scopes "/subscriptions/$subscriptionID"

    # Parse the JSON output once and pick out the generated password and display name
    $sp = $jsonResult | ConvertFrom-Json
    $SPPassword = $sp.password
    $SPName = $sp.displayName

    Write-Host "You created service principal [$servicePrincipalName] with password [$SPPassword] in tenant [$tenantID] with access to subscription [$subscriptionID]" -ForegroundColor 'Cyan'

Below is some PowerShell code that simulates your app. It logs in to Azure as the service principal and accesses the storage account.

Run this in the Cloud Shell:

    # Now sign in as this service principal
    $passwd = ConvertTo-SecureString $SPPassword -AsPlainText -Force
    $spData = Get-AzADApplication -DisplayName $SPName
    $pscredential = New-Object System.Management.Automation.PSCredential($spData.AppId, $passwd)
    Connect-AzAccount -ServicePrincipal -Credential $pscredential -Tenant $tenantID

Check whether the login worked. `Connect-AzAccount` prints output similar to:

    Account                          SubscriptionName     TenantId           Environment
    -------                          ----------------     --------           -----------
    http://myADCServicePrincipal925  Microsoft Azure ...  72f988bf-........  AzureCloud

If it does, proceed.

You need to give this 'user', or better this 'service principal', permissions on the container in your storage account:

[Azure Portal] -> %Your Storage Account% -> Containers -> 'secured' -> Access Control (IAM) -> Add a role assignment...

- Role: e.g. "Storage Blob Data Contributor"

- Select: "myADCServicePrincipal..."

Now, let's try to access the file in the container using the service principal.

Note: As you can only use the service principal to log in via the command line (as opposed to the portal), we also have to access the storage account via the command line.

    Write-Host "Select the proper storage account" -ForegroundColor 'Blue'

    # Select your storage account from a numbered menu
    do {
        $saccts = @("")
        Get-AzResource -ResourceType 'Microsoft.Storage/storageAccounts' | ForEach-Object -Begin { $i = 0 } -Process {
            $i++
            $saccts += "{0}. {1}" -f $i, $_.Name
        }
        $saccts | Format-Wide { $_ } -Column 4 -Force
        $r = Read-Host "Select the storage account"

        # Only index into the menu when the input is a number within range
        $SA = $null
        if ($r -match '^\d+$' -and [int]$r -ge 1 -and [int]$r -lt $saccts.Count) {
            $SA = $saccts[[int]$r].Split()[1]
        }

        if ($null -eq $SA) { Write-Host "You must make a valid selection" -ForegroundColor 'Red' }
        else {
            Write-Host ('Selecting storage account: {0}' -f $saccts[[int]$r]) -ForegroundColor 'Green'
        }
    }
    until ($null -ne $SA)

    # Build a storage context that uses the signed-in identity (the service principal)
    $ctx = $null
    $ctx = New-AzStorageContext -StorageAccountName $SA -UseConnectedAccount
    $container = Get-AzStorageContainer -Context $ctx -Name 'secured'
    Get-AzStorageBlob -Container $container.Name -Context $ctx

    # In case of an error: do you have the correct role permissions (e.g. Storage Blob Data Contributor)?
    #Get-AzRoleAssignment -ServicePrincipalName $SPName

    # Download the first file and display its content
    $myfiles = Get-AzStorageBlob -Container $container.Name -Context $ctx
    $myfile = ($myfiles | Select-Object -First 1).Name
    Get-AzStorageBlobContent -Blob $myfile -Container $container.Name -Context $ctx -Force
    Write-Host "the content...." -ForegroundColor 'Cyan'
    $content = Get-Content -Path $myfile
    Write-Host $content -ForegroundColor 'DarkYellow'
    '(:'

To delete your service principal, run:

    # Cleanup
    Logout-AzAccount -Username $SPName
    Read-Host -Prompt "Ready to cleanup? (key)"
    $SPDisplayName = ($jsonResult | ConvertFrom-Json).displayName
    Remove-AzADApplication -DisplayName $SPDisplayName

# Add an Azure file share to a server

# Prerequisites

Create a VM to attach the file share to if you don't have one. Click on the button.

Note: This deployment will also create a storage account for you.

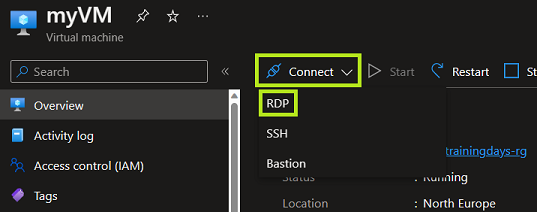

Connect to your VM via the portal:

[Azure Portal] -> Virtual machines -> Your Virtual machine -> Select `Connect` via `RDP` -> Download the RDP file

- Open the downloaded RDP file on your device.

- If Windows tries to connect you via Windows Hello, select 'More choices' and 'Use a different account', as you have to use the credentials you set the VM up with.

- Provide the username and password you created the VM with and select 'OK'.

- You can disregard the warning that the remote computer's identity cannot be verified.

Create a file share via the portal:

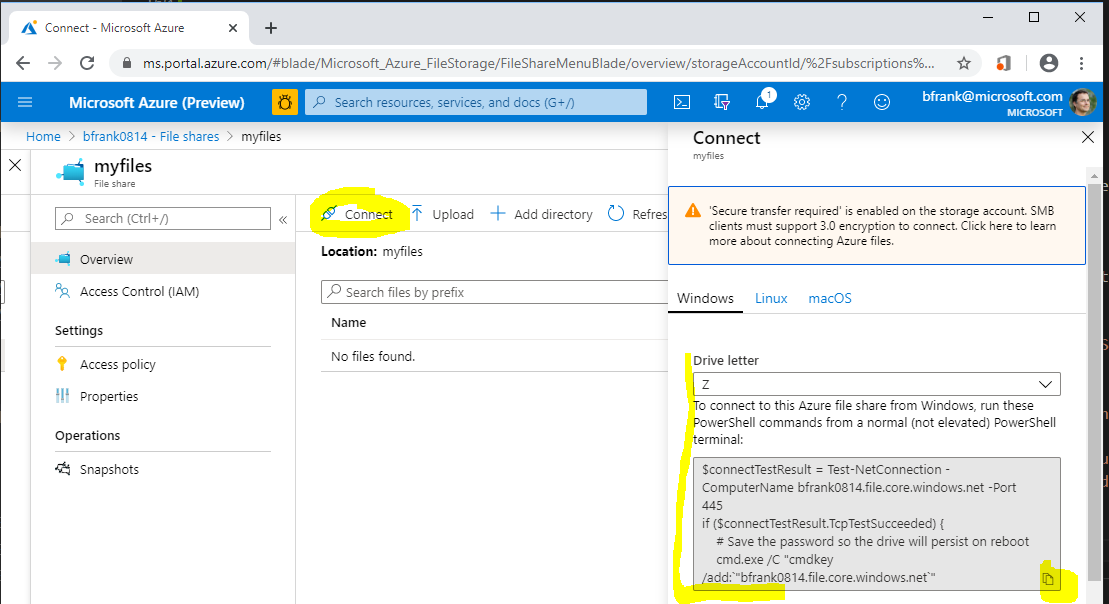

[Azure Portal] -> Storage Account -> File Shares -> "+" File Share

| Name | Value |
| --- | --- |
| Name | myfiles |

In your Azure VM, mount the share as a drive by executing the command taken from:

[Azure Portal] -> Storage Account -> File Shares -> 'myfiles' -> Connect -> 'Show Script' -> copy the code into the clipboard

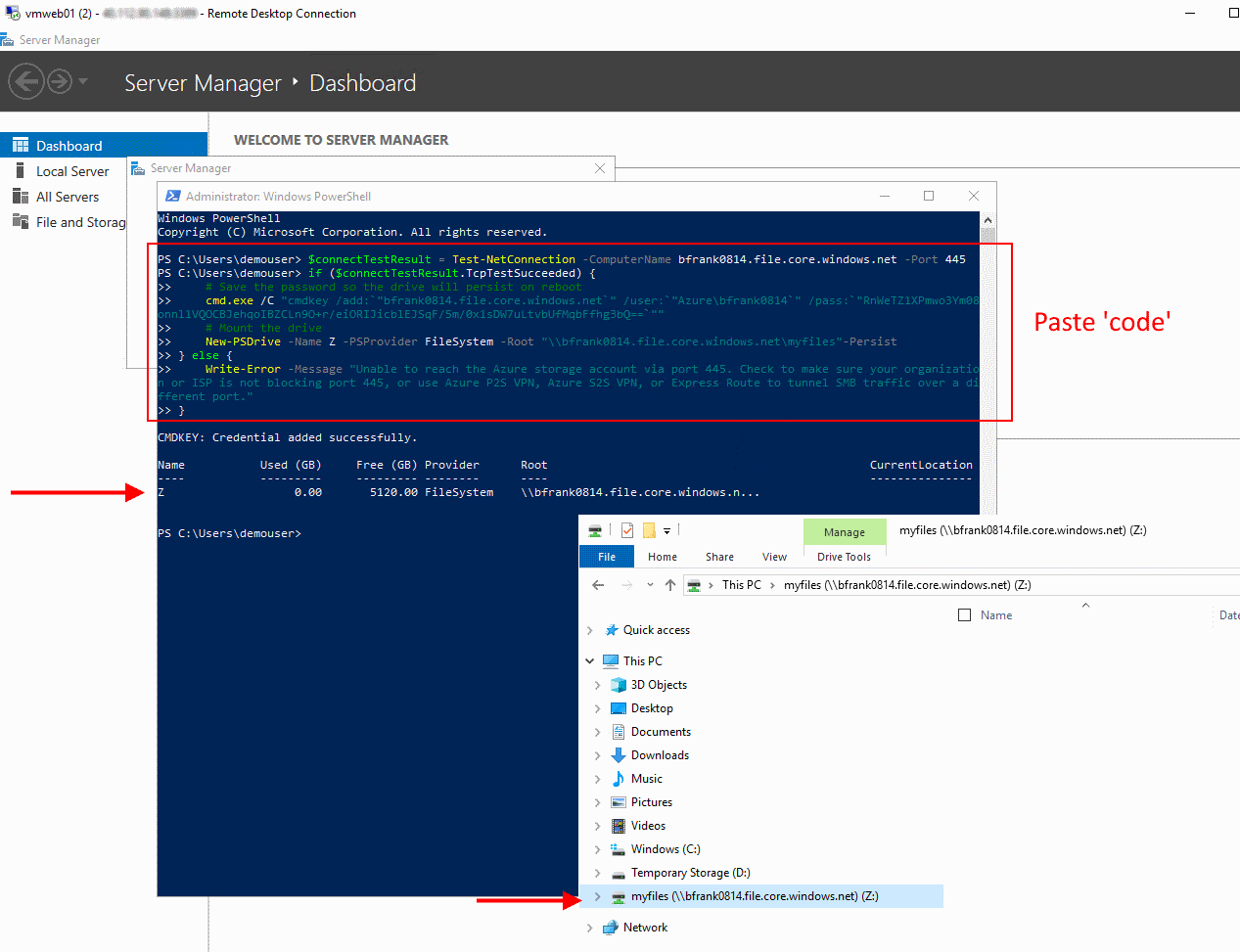

In your VM paste the code into a PowerShell window and execute it. Once successful your 'drive' has been mounted:

❔ Question:

- What is the default quota of an Azure file share? [5TB]

- Which user account is used for establishing the connection? [In this case none, but the storage account key]

- Is the 'drive' available to other users that log on to the VM? [No]

- Is the 'drive' mounted 'automatically' after a reboot? [Yes]

- Can I mount a file share located in e.g. North Europe from a machine located in e.g. West Europe? [Yes]

# Cleanup

Delete the resource group `rg-sachallenge`.